AdaptiveLearning

Adaptive learning using AI involves building systems that personalize educational content and pacing based on each learner’s strengths, weaknesses, preferences, and progress. Here’s a step-by-step guide on how to design and implement AI for adaptive learning:

📌 1. Define the Learning Objectives and Use Case

Subject area: e.g., Math, Language, Biology.

Target audience: K-12, college, working professionals, etc.

Format: exercises, videos, quizzes, interactive lessons.

📌 2. Collect and Prepare Educational Content

Structure content by topic and difficulty level.

Use tagged metadata: concepts, prerequisites, learning goals.

Format:

Questions and answers (MCQ, open-ended, etc.)

Explanatory content (text, video)

Assessments with outcomes

📌 3. Track Learner Interaction Data

Capture data such as:

Time spent on questions

Correct/incorrect answers

Number of hints used

Navigation patterns

Confidence (if self-reported)

This data becomes input for the AI model.

📌 4. Design Learner Models

Choose how to represent a learner’s knowledge state:

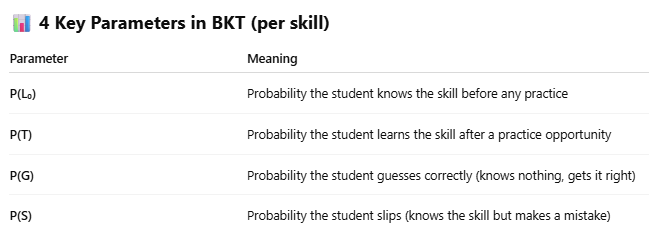

A. Bayesian Knowledge Tracing (BKT)

Track mastery of individual skills over time.

Probabilistic model: updates belief in learner proficiency after each response.

B. Item Response Theory (IRT)

Models probability of a correct answer based on:

Skill level of learner

Difficulty of question

C. Deep Learning Approaches

Deep Knowledge Tracing (DKT): RNN-based model that learns patterns in sequential student responses.

Transformer-based models (e.g., EduBERT) for richer understanding of student input (open-ended text, etc.)

📌 5. Implement Adaptivity Engine

Use AI model predictions to:

Recommend next content item.

Skip mastered concepts.

Revisit weak topics.

Adjust pace or format (text, video, example-based).

📌 6. Feedback and Explanation Layer

Provide:

Hints or scaffolding based on AI-detected struggle.

Explanations tailored to errors made.

Visualizations of progress.

📌 7. Reinforcement Learning (Optional Advanced Layer)

Model the learning path as a Markov Decision Process.

Agent (AI tutor) selects next action to maximize long-term learning gain.

Use techniques like Q-learning, DQN.

📌 8. Personalization Dimensions

Learning style (visual/audio)

Preferred pacing (fast/slow)

Engagement metrics

Motivation levels

📌 9. Tools & Platforms You Can Use

Content: OpenStax, Khan Academy, custom authoring

ML Frameworks: TensorFlow, PyTorch, Scikit-learn

LMS Integration: Moodle, Canvas, SCORM

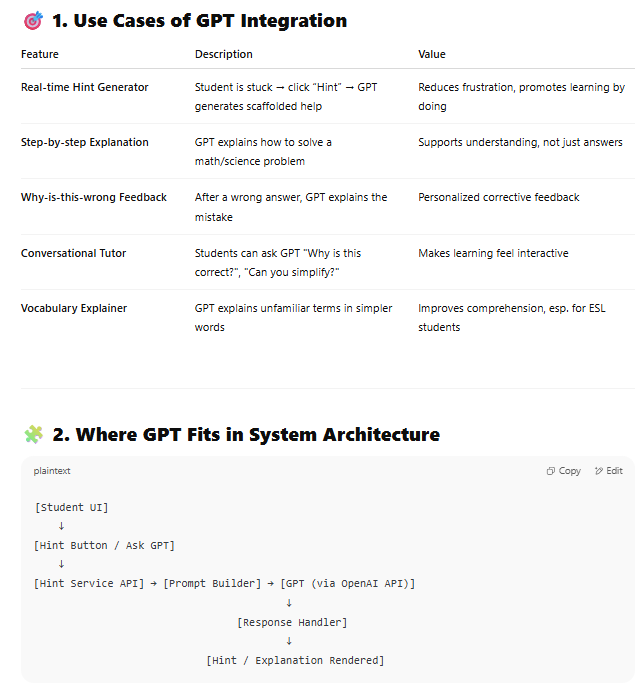

AI APIs: GPT for explanation, search, or chatbot support

📌 10. Evaluation & Continuous Improvement

A/B testing different adaptivity policies

Human-in-the-loop review

Student feedback loop

Metrics: retention, mastery rate, learning efficiency

✅ Example Architecture Diagram

cssCopyEdit

Student → UI (Web/App) → Event Tracker ↓ Learner Model (DKT/IRT) ← Content Repository ↓ Adaptivity Engine → Next Content / Feedback

If you're planning to build a prototype, I can help with:

Python code for knowledge tracing

GPT integration for hints/explanations

Backend architecture

UX flow for adaptive content delivery

Let me know your context (e.g., subject, student level, tech stack) and I’ll tailor further.

======================

A. Bayesian Knowledge Tracing (BKT) is one of the foundational models used in adaptive learning systems to estimate a student's knowledge of a concept over time, based on their interaction history (correct/incorrect answers). It's widely used in intelligent tutoring systems like Cognitive Tutor and forms the basis for more complex models like Deep Knowledge Tracing (DKT).

🔍 What is Bayesian Knowledge Tracing?

BKT models a student’s knowledge state as hidden (not directly observable) and uses Bayesian inference to update the belief about whether the student has "learned" a skill or not, based on observed responses.

Each skill or concept is modeled independently.

🔁 How BKT Works – Step by Step

Assume we are tracking a skill like "solving linear equations".

Initialize student’s knowledge with P(L₀)

After each question:

If student is correct: might be due to actual knowledge or a guess

If student is incorrect: might be due to lack of knowledge or a slip

Use Bayes’ theorem to update the belief of mastery

After each step, update the probability they’ve learned using P(T)

🔧 Bayesian Update Example

Suppose after 1 question the student got it correct.

We use:

csharpCopyEdit

P(L₁ | correct) = [P(L₀) × (1 - P(S))] / [P(L₀) × (1 - P(S)) + (1 - P(L₀)) × P(G)]

Then we update:

javaCopyEdit

P(L₁⁺) = P(L₁ | correct) + [1 - P(L₁ | correct)] × P(T)

This gives us the updated probability the student now knows the skill.

💡 Why BKT is Useful

Simple, interpretable

Real-time tracking of mastery per skill

Used to decide:

When to move on to harder content

When to repeat or remediate

When to recommend hints or scaffolded help

🧪 When to Use BKT

Early-stage MVP with limited data

You want interpretable knowledge state tracking

You can model your content into discrete skills

Real-time adaptation without deep ML overhead

Would you like:

Python implementation of BKT?

Comparison between BKT and DKT for your AI learning system?

Integration idea with your hint system?

Let me know how deeply you want to implement it.

You said:

tell more about Deep Learning Approaches

ChatGPT said:

Deep learning approaches for adaptive learning systems aim to model a student’s knowledge, behavior, and learning trajectory using powerful sequence models like RNNs, LSTMs, Transformers, etc. These methods overcome many of the limitations of classic models like BKT by learning directly from data and capturing nonlinear patterns, skill interdependencies, and time effects.

🔁 1. Deep Knowledge Tracing (DKT)

Introduced by Piech et al., 2015

Uses RNN (LSTM) to model the sequence of student interactions.

🔧 Input

Sequence of interactions: each is a tuple like (question ID, correct/incorrect)

🧮 Output

Predicts P(correct | next question)

🔍 Pros:

Learns temporal dependencies

No need to predefine skill labels

Better performance than BKT on complex datasets

⚠️ Cons:

Hard to interpret (black box)

Needs large dataset

Cannot easily give human-readable feedback

🧠 2. DKVMN (Dynamic Key-Value Memory Network)

Each “skill” is a key

The student's current knowledge state is stored in value memory

Can read/write knowledge as the student learns

🧠 Pros:

Explicit memory → more interpretable than DKT

Better at handling long-term dependencies

⚠️ Cons:

Needs large training data and careful tuning

🧲 3. SAKT (Self-Attentive Knowledge Tracing)

Based on Transformer attention mechanism (like GPT)

Attends to past responses when predicting the next answer

🎯 Strengths:

Good for long sequences

Attention map gives interpretability (e.g., what past question influenced current prediction)

✅ When to Use Deep Learning in EdTech

You have large student interaction data (>100K+ sequences)

You want high performance in prediction

You can handle black-box interpretability (or mitigate with attention/explanation modules)

You want to personalize learning paths deeply and dynamically

Would you like:

Code example of DKT or SAKT (PyTorch)?

Comparison table: BKT vs DKT vs SAKT?

Guidance on building your student interaction dataset?

============================

Modeling the learning path as a Markov Decision Process (MDP) means treating learning as a decision-making problem, where the system chooses which content or exercise to show next, based on a student's current knowledge and learning history — with the goal of maximizing long-term learning outcomes.

This approach comes from Reinforcement Learning (RL) and can be used to power truly adaptive tutoring systems.

🎓 Example in an EdTech System

Imagine a student going through math problems.

State: Student has mastered addition, weak in multiplication.

Action: System picks “multiplication level 2” question.

Reward: Student answers correctly → +1 reward.

Transition: Student moves to a more advanced knowledge state.

Goal: Maximize total learning gains over a course.

📘 Real Use Case:

Imagine a startup platform teaching coding:

The system models student states like

{basic loops, if/else, recursion}Each lesson = action

Reward = how quickly and correctly student completes the challenge

MDP learns which path helps students learn fastest and retain longest

🛠️ Implementation Considerations

You need student interaction data over time to train transitions & rewards

May start with simulated learners to bootstrap the model

Define reward carefully: is it short-term correctness? or long-term retention?

Can be combined with Knowledge Tracing (state estimation)

📦 Tools & Libraries

OpenAI Gym: customize environments for learning scenariosStable-Baselines3: standard RL algorithms in PythonRLlib (Ray): scalable RL for productionUse PyTorch/TensorFlow for deep RL (e.g., DQN or Actor-Critic)

Would you like:

A code example of MDP or RL for learning path?

A sample simulation of student learning as an environment?

Help with defining state/action/reward for your use case?

Let me know — I can tailor the next step for your EdTech AI team.

You said:

What is Item Response Theory (IRT)

ChatGPT said:

Item Response Theory (IRT) is a statistical framework used in education and psychometrics to model the relationship between a student's latent ability (what they truly know) and their probability of correctly answering test items (questions).

IRT is foundational in adaptive testing, like the GRE, GMAT, or computer-based educational systems.

🧠 Core Idea

IRT assumes that each question (item) has certain characteristics, and that a student's performance depends on both:

The student's ability level (denoted as θ, "theta")

The item’s difficulty and discrimination

It helps answer:

➡️ “Given a student’s skill, what is the probability they’ll get this question right?”

➡️ “Given their responses, what is the likely skill level?”

📊 Key Components of IRT

1. θ – Ability Level

A real number (e.g. -3 to +3)

Higher θ means more knowledge/skill

2. Item Parameters

There are different IRT models depending on how many parameters you consider for each item:

🛠️ Tools / Libraries

pyirt– Python IRT modelsmirt(R) – Multidimensional IRTCatSim– Simulations for computerized adaptive testingPyMC,TensorFlow Probability– for Bayesian IRT

📘 Example

If a student with θ = 1.5 is presented with:

Easy question (b = -1): high chance of getting it right

Hard question (b = 2.0): lower chance, but if they get it right → system raises their θ estimate

Would you like a:

Python code example of IRT?

Integration with your EdTech AI adaptive model?

Comparison between IRT and DKT (Deep Knowledge Tracing)?

Let me know your next step!

===========================

Here’s a complete UX flow for an adaptive learning system, designed to personalize learning experiences in real time. The flow ensures that learners get the right content at the right time based on their performance, behavior, and preferences.

✅ Overview of UX Flow Stages

1. Onboarding & Setup

2. Diagnostic Assessment

3. Learning Dashboard

4. Personalized Content Delivery Loop ├─ Content Delivery ├─ Real-Time Assessment ├─ Adaptation Engine └─ Feedback & Explanation

5. Progress Tracking & Motivation

6. Review & Mastery Reinforcement🧩 1. Onboarding & Setup

Goal: Understand the learner’s goals, background, and preferences.

UI Elements:

Account creation/login

Learning goal selection (e.g., "Improve math skills for entrance exam")

Skill level self-assessment

Optional learning style preference (visual/audio/text)

Daily/weekly learning time commitment

🧪 2. Diagnostic Assessment (Initial Placement Test)

Goal: Estimate the learner’s current skill level.

UI Elements:

Short, adaptive quiz (~5–10 questions)

Progress bar with confidence estimation

Real-time hint option (GPT-based explanation if stuck)

Output: Learner profile initialized — topic proficiency map.

📊 3. Learning Dashboard

Goal: Let users explore, plan, and track learning paths.

UI Elements:

Personalized skill map or learning tree

Progress bars per topic

“Next recommended step” button

Tabs: Learn | Review | Goals | Settings

🔁 4. Adaptive Learning Loop

A. Content Delivery

Goal: Present new content in a form that matches the learner’s style.

UI Elements:

Card view: Concept + interactive explanation

Option to switch format (video, diagram, quiz)

B. Real-Time Micro-Assessment

Goal: Test understanding after short content bursts.

UI Elements:

1–3 short questions after each concept

Confidence rating slider (e.g., "How sure are you?")

Immediate feedback (correct/incorrect)

C. Adaptation Engine (Backend)

Goal: Update learner model and decide next action.

If confident + correct → go to next level/topic

If unsure or incorrect → provide:

Hints → Simpler examples → Retry

Or fallback content (e.g., review prerequisite concept)

D. Feedback & Explanation

Goal: Reinforce learning or correct misunderstandings.

UI Elements:

GPT-generated explanations for wrong answers

“Why is this right?” toggle

Hints in tiers (light → medium → full)

📈 5. Progress Tracking & Motivation

Goal: Keep learners engaged with visible results.

UI Elements:

Daily streaks

XP / points system

Badges: “Mastered Fractions”, “Streak Learner”, etc.

Weekly performance report (with charts)

🔁 6. Review & Mastery Reinforcement

Goal: Space repetition and concept reinforcement.

UI Elements:

Review tab with:

“Weakest 3 concepts this week”

“Quick Review Mode” (flashcards, quizzes)

Mastery badges after multiple confirmations

🧠 Optional UX Enhancements

Chatbot tutor: “Ask a mentor” powered by GPT

Voice interaction: Read content out loud or accept answers via voice

Smart reminders: Notifications with personalized timing

🔧 Wireframe Sketch Summary

If you’d like, I can mock up this UX flow as a Figma wireframe or a React prototype. Or we can tailor it for a specific age group (e.g., high school STEM learners). Would you like to continue in that direction?

================================

Integrating GPT (like ChatGPT or GPT-4) into your adaptive learning system can dramatically improve user experience, especially for real-time hints, explanations, and personalized tutoring. Here’s a breakdown of how you can integrate GPT for hints/explanations in your EdTech AI product:

🧠 3. Prompt Engineering Examples

Hint Prompt:

“A student is solving this question: ‘If 3x + 5 = 14, what is x?’ Give them a helpful hint without solving it.”

Explain Why Wrong:

“A student answered 12 for the question: ‘What is 4 × 3?’ The correct answer is 12. The student's answer is incorrect. Give a polite explanation why.”

Simplify Concept:

“Explain Newton’s First Law of Motion to a 12-year-old using simple words and a real-world example.”

Tutor Chat Prompt (context-aware):

“Act like a helpful math tutor. A student is asking: ‘Why do we divide both sides of the equation?’ The problem is: 2x = 10.”

⚙️ 4. Technical Notes

Use OpenAI API (GPT-3.5/GPT-4 or fine-tuned model)

Structure data flow to include:

Student context (e.g., skill level)

Question metadata (e.g., topic, standard, difficulty)

Chat history (optional for continuity)

Set output constraints: max tokens, tone, language level

🧪 5. Optional Enhancements

Multilingual Support: Ask GPT to output hints in the student’s preferred language (e.g., Vietnamese, Thai)

Audio Hints: Convert GPT text to voice (TTS) for younger learners

Inline Explanations: Highlight each step in multi-step problems

✅ 6. GPT Hint Policy (To Avoid Overuse)

Use a token system: Students get 3–5 hints/day unless premium

Encourage reflection before asking: Add "Try again" delay

Track hint usage → analyze struggle patterns

Would you like help drafting:

Specific GPT prompts per subject (e.g., biology, physics)?

System architecture diagram for your dev team?

Backend integration code sample (e.g., Python + OpenAI API)?

Let’s design this smartly for your MVP.

🔐 Security & Privacy

Use OpenAI’s moderation API to filter outputs (optional but recommended)

Mask PII before sending to GPT

Rate limit hint usage per user/session

Use HTTPS for all API calls

Here’s a backend integration code sample using Python + FastAPI + OpenAI API that you can use to generate hints or explanations in your adaptive learning system.

✅ Sample: FastAPI Backend for GPT Hints

📁 File structure

bashCopyEdit

/gpt_hint_service │ ├── main.py # FastAPI entry point ├── prompt_builder.py # Hint prompt logic └── requirements.txt # Dependencies

📄 requirements.txt

txtCopyEdit

fastapi openai uvicorn python-dotenv

📄 .env (DO NOT COMMIT TO GIT)

envCopyEdit

OPENAI_API_KEY=sk-xxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxx

📄 prompt_builder.py

pythonCopyEdit

def build_hint_prompt(question: str, topic: str = "", student_level: str = "middle school") -> str: return ( f"A student is trying to solve the following question in {topic}:\n" f"'{question}'\n" f"The student is stuck. Give a helpful hint without giving the final answer.\n" f"Make it appropriate for a {student_level} learner.\n" )

📄 main.py

pythonCopyEdit

from fastapi import FastAPI, HTTPException from pydantic import BaseModel from dotenv import load_dotenv import openai import os from prompt_builder import build_hint_prompt # Load API key from .env load_dotenv() openai.api_key = os.getenv("OPENAI_API_KEY") app = FastAPI() class HintRequest(BaseModel): question: str topic: str = "math" student_level: str = "middle school" class HintResponse(BaseModel): hint: str @app.post("/generate-hint", response_model=HintResponse) async def generate_hint(req: HintRequest): try: prompt = build_hint_prompt(req.question, req.topic, req.student_level) response = openai.ChatCompletion.create( model="gpt-4", # or gpt-3.5-turbo for cost-efficiency messages=[ {"role": "system", "content": "You are a helpful AI tutor."}, {"role": "user", "content": prompt} ], max_tokens=100, temperature=0.7, ) hint_text = response['choices'][0]['message']['content'].strip() return HintResponse(hint=hint_text) except Exception as e: raise HTTPException(status_code=500, detail=str(e))

▶️ Run the server

bashCopyEdit

uvicorn main:app --reload

Visit: http://localhost:8000/docs

Swagger UI auto-generates interactive API documentation from your FastAPI code.

📬 Example API Call

POST /generate-hint

Body:

jsonCopyEdit

{ "question": "What is the area of a triangle with base 10 and height 5?", "topic": "geometry", "student_level": "grade 6" }

Response:

jsonCopyEdit

{ "hint": "Remember, the formula for the area of a triangle is (base × height) ÷ 2. Try plugging in the numbers." }

🔒 Notes on Production

Add rate limiting (e.g., with Redis or token-based cooldowns)

Add OpenAI output filtering or moderation if needed

Log GPT usage for analytics/debugging

Would you like me to:

Extend this with user login/session tracking?

Add a frontend React call example?

Enable multi-language output?

Just let me know what your stack and user flow looks like.

================================

Setting up a startup to build an adaptive learning system can be done leanly at first, but the number of people you need depends on:

Product scope (MVP or full SaaS)

Target user (K-12, college, enterprise)

Depth of AI (rules-based, ML, or deep adaptive models)

Content creation: Do you use existing material or generate your own?

Here’s a realistic lean startup team structure for the first 6–12 months, focused on building a solid MVP:

👥 Core Team (6–10 people)

1. Founders (1–2)

CEO/PM: Vision, product-market fit, user interviews, roadmap.

CTO (or Technical Cofounder): Architecting, AI stack, platform choices.

2. AI Engineer / Data Scientist (1–2)

Implements learner model (BKT, DKT, IRT)

Develops adaptation algorithm

Works on model fine-tuning (PyTorch, TensorFlow)

May also integrate GPT for explanation/hints

3. Full-Stack Developer (1–2)

Builds the web/app frontend and backend

Implements API endpoints, auth, dashboards

Integrates content delivery + AI decision engine

4. UX/UI Designer (1)

Designs learning flows, dashboards, mobile/desktop interface

Conducts user interviews and usability testing

5. Content Specialist (1–2)

Tags and structures content by skill/concept

Writes or curates assessments, feedback, explanations

Optional: works with GPT to auto-generate quiz questions or explanations

6. Growth Hacker / Marketing (1)

Creates landing page, early growth strategy, onboarding funnel

Tests acquisition channels (e.g., teachers, parents, schools)

💡 Optional Roles (Phase 2)

Once you start growing or have funding:

Educational Psychologist (for curriculum validity)

QA/Tester

Customer Support/Community Manager

Sales or B2B Partnerships

Mobile Dev (if building native apps)

🛠️ Tools & Stack to Reduce Team Size

No-code CMS (e.g., Notion + APIs, Firebase)

GPT + LangChain for question generation, explanations

Ready learner model (e.g., DKT from open source)

LMS plugin (e.g., Moodle or Canvas integration) if you don't want to build from scratch

=======================

Great! Here’s how I can help you build a compelling pitch deck for an EdTech AI startup focused on adaptive learning:

✅ Suggested Pitch Deck Structure (10–12 slides)

Title Slide

Startup name, tagline

Logo + your name and contact info

Problem

Traditional education is one-size-fits-all

Students learn at different paces → many fall behind or get bored

Teachers can’t personalize content at scale

Solution

Your AI-powered Adaptive Learning Platform

Real-time content personalization based on learner behavior

Data-driven progress tracking + mastery-based advancement

Product

Key features:

Diagnostic assessment

Content adaptation

Rule-based + AI fallback logic

Teacher dashboard

Demo or screenshots (mockups okay for early-stage)

Market Opportunity

Global EdTech market size ($XXB)

Online K-12, tutoring, corporate training segments

TAM / SAM / SOM if available

Technology

AI/ML engine: personalization, prediction, content sequencing

Curriculum engine: modular content blocks + metadata

Scalable backend (cloud, data pipeline)

Business Model

B2C: Subscription (monthly/yearly)

B2B: Schools/licensing model

Freemium + premium content

Traction (or Roadmap if pre-revenue)

Pilot with X schools / Y users / partnerships

Results: retention, engagement, performance boost

If early: roadmap of MVP → Pilot → Paid Users

Go-to-Market Strategy

Channels: partnerships, influencers, school districts, online ads

Growth loops: referrals, teacher endorsements, leaderboard

Team

Founders: EdTech, AI, UX expertise

Advisors: educators, former school admins, AI researchers

Ask

How much funding? (e.g., $500k pre-seed / $1.5M seed)

Use of funds: team, content dev, AI infra, pilots

Vision / Closing

"We believe every student deserves a learning path that works for them."

Reimagine personalized education at scale.

======================

Slide 1: Title Slide Startup Name: LearnFlow AI

Tagline: Personalized Learning. At Scale.

Your Name | Founder & CEO

Email | Website | Date

Slide 2: The Problem

Education today is largely one-size-fits-all.

Students learn at different speeds and styles, but classrooms can’t adapt in real time.

Teachers are overwhelmed, under-resourced, and unable to personalize content for each student.

As a result, many students fall behind or disengage.

Slide 3: The Solution LearnFlow AI is an adaptive learning platform powered by artificial intelligence.

Delivers real-time personalized content based on student performance and engagement.

Supports mastery-based progression, ensuring no student is left behind.

Provides teachers with insights and recommendations to guide interventions.

Slide 4: The Product Key Features:

AI-powered diagnostic assessments

Personalized content sequencing

Reinforcement through adaptive quizzes and spaced repetition

Educator dashboard for monitoring and intervention

MVP ready by Q1 2026. Pilot version in development.

Slide 5: Market Opportunity

Global EdTech market: $400B+ by 2027 (HolonIQ)

K-12 and after-school tutoring alone: $100B+

Shift to hybrid/digital learning post-COVID

Increasing focus on personalized, measurable outcomes

Slide 6: Technology

AI Engine: Learner modeling, content recommendation, real-time adaptation

Content Graph: Tagged and structured curriculum blocks

Fallback Logic: Rule-based safeguards for high uncertainty

Architecture: Cloud-native, scalable backend with privacy-first design

Slide 7: Business Model

B2C: Subscription (monthly/yearly tiers for parents/students)

B2B: Licensing model for schools & tutoring centers

Optional: Freemium model with in-app purchases for advanced modules

Slide 8: Traction / Roadmap

Early interest from 3 schools (2 in Vietnam, 1 international)

MVP content aligned to Grade 6-9 science & math curriculum

Target: 500+ student pilot Q2 2026

Slide 9: Go-To-Market Strategy

Partner with schools and private tutoring centers for pilots

Social media and influencer outreach to parents

Strategic EdTech partnerships for bundled offerings

Leverage performance data to drive word-of-mouth growth

Slide 10: Team

Founder: [Your Name] – Background in AI & Education

CTO: [TBD] – Experience with scalable learning platforms

Advisors: Former educators, EdTech investors, AI researchers

Slide 11: The Ask

Raising $500,000 pre-seed to:

Complete MVP & pilot (50%)

Content development (30%)

Hire AI/Backend engineers (20%)

Runway: 12-15 months to hit 5K users & ARR traction

Slide 12: Vision / Closing We believe every learner deserves a personalized path to success.

LearnFlow AI is reimagining education using the power of adaptive technology.

Join us in building the future of learning.

=================

A roadmap for MVP (Minimum Viable Product) is a step-by-step plan that outlines how your team will go from idea to a working, testable version of your product with the minimum features necessary to deliver value and collect feedback.

✅ 1. Purpose of an MVP Roadmap

Align team and investors on what will be built and when

Focus on core features only — avoid “feature creep”

Ensure fast feedback from early users

Plan for iterative development and learning

✅ 2. Typical MVP Roadmap Phases (for EdTech AI startup)

Phase 0: Research & Validation (Month 0–1)

Define target user: student/parent/teacher

Validate problem: interviews, surveys

Study curriculum standards (e.g., Grades 6–9 science, math)

Identify key adaptive learning needs

Phase 1: Scope MVP (Month 1–2)

Define core user flows:

Student login → assessment → adaptive content → progress

Finalize feature list for MVP (see next section)

Choose tech stack: backend, frontend, AI engine

Build team (CTO, AI engineer, content designer...)

Phase 2: MVP Build (Month 2–5)

Build content backend: store learning modules with metadata

Develop adaptive engine v1 (rule-based or AI-lite)

Frontend: basic learner dashboard + quiz player

Create initial content (e.g., 10–15 lessons in math/science)

Integrate user tracking & analytics

Internal testing & bug fixing

Phase 3: Pilot Launch (Month 5–6)

Partner with 1–2 schools or tutoring centers

Onboard 50–100 students

Track engagement, accuracy, feedback

Gather qualitative and quantitative data

Phase 4: Iterate (Month 6–7)

Refine recommendation engine based on usage

Adjust content flow, difficulty adaptation

Add teacher/parent dashboard if needed

Prioritize features for V1.0

🛠️ Tools / Libraries

pyirt– Python IRT modelsmirt(R) – Multidimensional IRTCatSim– Simulations for computerized adaptive testingPyMC,TensorFlow Probability– for Bayesian IRT

📘 Example

If a student with θ = 1.5 is presented with:

Easy question (b = -1): high chance of getting it right

Hard question (b = 2.0): lower chance, but if they get it right → system raises their θ estimate

Would you like a:

Python code example of IRT?

Integration with your EdTech AI adaptive model?

Comparison between IRT and DKT (Deep Knowledge Tracing)?

Let me know your next step!

🧩 Noteworthy Technologies Used

Bayesian Knowledge Tracing (BKT)

Deep Knowledge Tracing (DKT)

Item Response Theory (IRT)

Markov Decision Process (for planning learning paths)

Large Language Models (like GPT)

Would you like a competitive analysis table, or help defining your unique differentiation strategy if you're starting your own adaptive learning company?